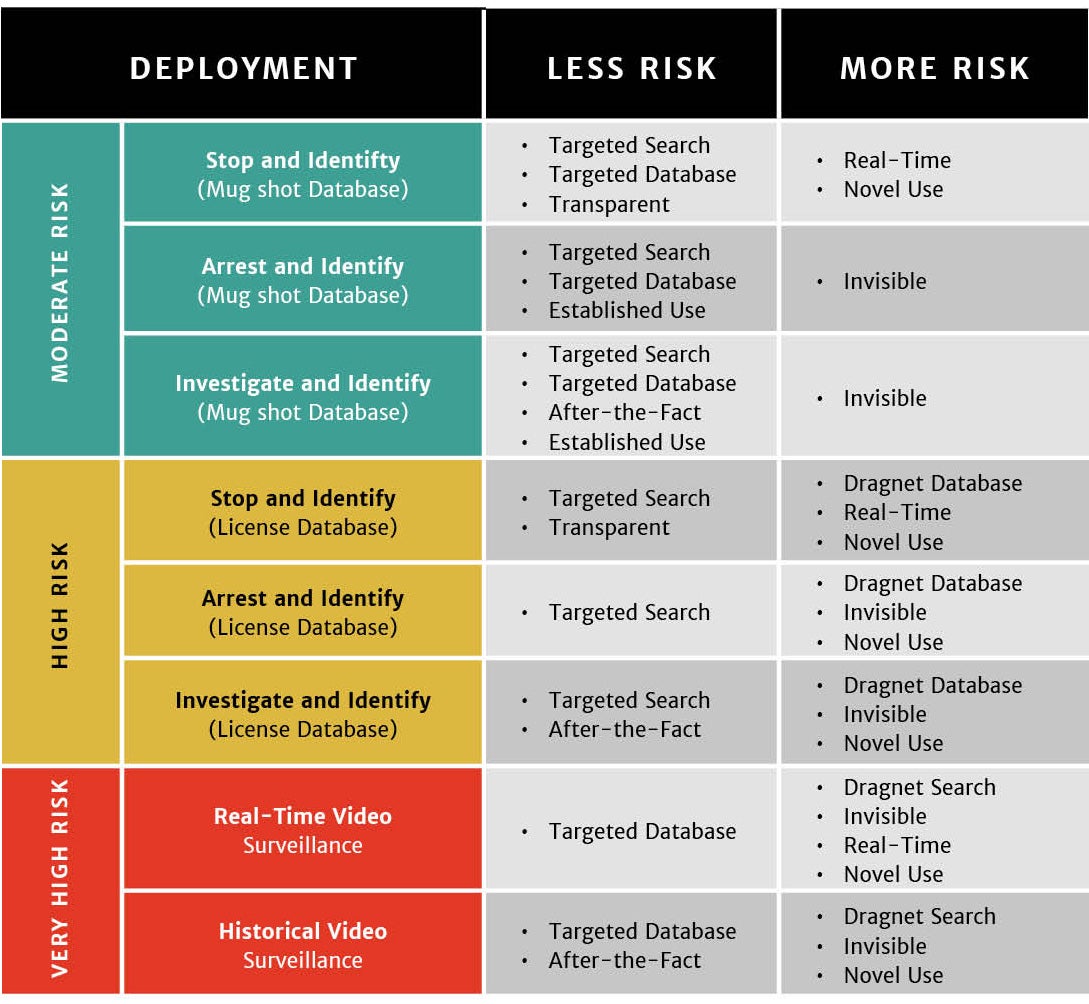

In this section, we categorize police uses of face recognition according to the risks that they create for privacy, civil liberties, and civil rights. Some uses of the technology create new and sensitive risks that may undermine longstanding, legally recognized rights. Other uses are far less controversial and are directly comparable to longstanding police practices. Any regulatory scheme should account for those differences.

The overall risk level of a particular deployment of face recognition will depend on a variety of factors. As this report will explain, it is unclear how the Supreme Court would interpret the Fourth Amendment or First Amendment to apply to law enforcement face recognition; there are no decisions that directly answer these questions.29 In the absence of clear guidance, we can look at general Fourth Amendment and First Amendment principles, social norms, and police practices to identify five risk factors for face recognition. When applied, these factors will suggest different regulatory approaches for different uses of the technology.

- Targeted vs. Dragnet Search. Are searches run on a discrete, targeted basis for individuals suspected of a crime? Or are they continuous, generalized searches on groups of people—or anyone who walks in front of a surveillance camera?

At its core, the Fourth Amendment was intended to prevent generalized, suspicionless searches. The Fourth Amendment was inspired by the British Crown’s use of general warrants, also known as Writs of Assistance, that entitled officials to search the homes of any colonial resident.30 This is why the Amendment requires that warrants be issued only upon a showing of probable cause and a particularized description of who or what will be searched or seized.31

For its part, the First Amendment does not protect only our right to free speech. It also protects our right to peaceably assemble, to petition our government for a redress of grievances, and to express ourselves anonymously.32 These rights are not total and the cases interpreting them are at times contradictory.33 But police use of face recognition to continuously identify anyone on the street—without individualized suspicion—could chill our basic freedoms of expression and association, particularly when face recognition is used at political protests.

- Targeted vs. Dragnet Database. Are searches run against a mug shot database, or an even smaller watchlist composed of a handful of individuals? Or are searches run against driver’s license photo databases that include millions of law-abiding Americans?

Government searches against dragnet databases have been among the most controversial national security and law enforcement policies of the 21st century. Public protest after Edward Snowden’s leaks of classified documents centered on the NSA’s collection of most domestic call records in the U.S. Notably, searches of the data collected were more or less targeted; the call records database was not.34 In 2015, Congress voted to end the program.35

- Transparent vs. Invisible Searches. Is the face recognition search conducted in a manner that is visible to a target? Or is that search intentionally or inadvertently invisible to its target?

While they may stem from legitimate law enforcement necessity, secret searches merit greater scrutiny than public, transparent searches. “It should be obvious that those government searches that proceed in secret have a greater need for judicial intervention and approval than those that do not,” writes Professor Susan Freiwald. As she explains, “[i]nvestigative methods that operate out in the open may be challenged at the time of the search by those who observe it.” You can’t challenge a search that you don’t know about.36

- Real-time vs. After-the-Fact Searches. Does a face recognition search aim to identify or locate someone right now? Or is it run to investigate a person’s past behavior?

Courts have generally applied greater scrutiny—and required either a higher level of individualized suspicion, greater oversight, or both—to searches that track real-time, contemporaneous behavior, as opposed to past conduct. For example, while there is a split in the federal courts as to whether police need probable cause to obtain historical geolocation records, most courts have required warrants for real-time police tracking.37 Similarly, while federal law enforcement officers obtain warrants prior to requesting real-time GPS tracking of a suspect’s phone by a wireless carrier, and prior to using a “Stingray” (also known as a cell-site simulator) which effectively conducts a real-time geolocation search, courts have generally not extended that standard to requests for historical geolocation records.38

- Established vs. Novel Use. Is a face recognition search generally analogous to longstanding fingerprinting practices or modern DNA analysis? Or is it unprecedented?

In 1892, Sir Francis Galton published Finger Prints, a seminal treatise arguing that fingerprints were “an incomparably surer criterion of identity than any other bodily feature.”39 Since then, law enforcement agencies have adopted fingerprint technology for everyday use. A comparable shift occurred in the late 20th Century around forensic DNA analysis. Regulation of face recognition should take note of the existing ways in which biometric technologies are used by American law enforcement.

At the same time, we should not put precedent on a pedestal. As Justice Scalia noted in his dissent in Maryland v. King, a case that explored the use of biometrics in modern policing, “[t]he great expansion in fingerprinting came before the modern era of Fourth Amendment jurisprudence, and so [the Supreme Court was] never asked to decide the legitimacy of the practice.”40 A specific use of biometrics may be old, but that doesn’t mean it’s legal.

- 29. See below Sections Findings: Fourth Amendment and Findings: First Amendment for a discussion on the Fourth Amendment and First Amendment implications of law enforcement face recognition.

- 30. See William J. Cuddihy, The Fourth Amendment: Origins and Original Meaning 602–1791 537–548 (2009).

- 31. See U.S. Const. amend. IV.

- 32. U.S. Const. amend. I; see generally NAACP v. Alabama ex rel. Patterson, 357 U.S. 449 (1958); Talley v. California, 362 U.S. 60 (1960); McIntyre v. Ohio Elections Comm’n, 514 U.S. 334 (1995).

- 33. See below Findings: Free Speech.

- 34. See generally Privacy and Civil Liberties Oversight Board, Report on the Telephone Records Program Conducted Under Section 215 of the USA PATRIOT Act and on the Operations of the Foreign Intelligence Surveillance Court 21–37 (Jan. 23, 2014). A search of a telephone number within the database required one of 22 NSA officials to find that there is a “reasonable, articulable suspicion” that the number is associated with terrorism. Id. at 8–9. However, the NSA did not comply with this requirement for a number of years. See Memorandum Opinion, Foreign Intelligence Surveillance Court (2011) (Judge Bates) at 16 n. 14, https://assets.documentcloud.org/documents/775818/fisc-opinion-unconstitutional-surveillance-0.pdf (“Contrary to the government’s repeated assurances, NSA had been routinely running queries of the metadata using querying terms that did not meet the required standards for querying.”).

- 35. See Ellen Nakashima, NSA’s bulk collection of Americans’ phone records ends Sunday, Washington Post (Nov. 27, 2015), https://www.washingtonpost.com/world/national-security/nsas-bulk-collection-of-americans-phone-records-ends-sunday/2015/11/27/75dc62e2-9546-11e5-a2d6-f57908580b1f_story.html.

- 36. See Susan Freiwald, First Principles of Communications Privacy, 2007 Stan. Tech. L. Rev. 3, ¶62 (2007).

- 37. See, e.g., Tracey v. State, 152 So.3d 504, 515 (Fla. 2014) (“[T]he federal courts are in some disagreement as to whether probable cause or simply specific and articulable facts are required for authorization to access [historical cell-site location information].”); United States v. Espudo, 954 F. Supp. 2d 1029, 1038–39 (S.D. Cal. 2013) (noting that a significant majority of courts “has found that real-time cell site location data is not obtainable on a showing less than probable cause.”).

- 38. See Geolocation Technology and Privacy: Before the H. Comm. on Oversight and Gov’t Reform, 114th Cong. 2–4 (2016) (statement of Richard Downing, Acting Deputy Assistant Attorney General, Department of Justice) (explaining the practice of obtaining warrants prior to requesting real-time GPS records from wireless carriers, the policy of obtaining warrants prior to use of cell-site simulators, and the practice of obtaining historical cell-site location records upon a lower showing of “specific and articulable facts” that the records sought are relevant and material to an ongoing criminal investigation).

- 39. Francis Galton, Finger Prints 2 (1892).

- 40. Maryland v. King, 133 S. Ct. 1958, 1988 (2013) (Scalia, J., dissenting) (citing United States v. Kincade, 379 F.3d 813, 874 (9th Cir. 2004)).

Applying these criteria to the most common police uses of face recognition, three categories of deployments begin to emerge:

- Moderate Risk Deployments are more targeted than other uses and generally resemble existing police use of biometrics.

- High Risk Deployments involve the unprecedented use of dragnet biometric databases of law-abiding Americans.

- Very High Risk Deployments apply continuous face recognition searches to video feeds from surveillance footage and police-worn body cameras, creating profound problems for privacy and civil liberties.

These categories are summarized in Figure 4 and explained below. Note that not every criterion maps neatly onto a particular deployment.
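As a rough illustration of how the first two risk factors sort deployments into these categories, the logic can be sketched in a few lines of Python. This is a simplified reading of the framework, not a definitive scoring tool: the names `Deployment`, `Risk`, and `risk_category` are hypothetical, and the sketch deliberately ignores the transparency, timing, and novelty factors, which do not map neatly onto a single category.

```python
from dataclasses import dataclass
from enum import Enum


class Risk(Enum):
    MODERATE = "moderate"
    HIGH = "high"
    VERY_HIGH = "very high"


@dataclass(frozen=True)
class Deployment:
    """Hypothetical record describing one police use of face recognition."""
    name: str
    targeted_search: bool    # False = continuous, generalized search (e.g. live video)
    targeted_database: bool  # False = dragnet database (e.g. driver's license photos)


def risk_category(d: Deployment) -> Risk:
    """Assumed rule of thumb based on the search and database factors only."""
    if not d.targeted_search:
        # Continuous searches of everyone on camera fall in the top tier,
        # even when the watchlist itself is small.
        return Risk.VERY_HIGH
    if not d.targeted_database:
        # Targeted search, but run against millions of law-abiding people.
        return Risk.HIGH
    # Targeted search against a relatively targeted database (e.g. mug shots).
    return Risk.MODERATE


examples = [
    Deployment("Arrest and Identify (mug shots)", True, True),
    Deployment("Investigate and Identify (driver's license photos)", True, False),
    Deployment("Real-time video surveillance (watchlist)", False, True),
]
for d in examples:
    print(f"{d.name}: {risk_category(d).value} risk")
```

Under these assumptions, the same investigative search moves from moderate to high risk solely because of the database it is run against, which is the distinction the categories above are meant to capture.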

The primary characteristic of moderate risk deployments is the combination of a targeted search with a relatively targeted database.

When a police officer uses face recognition to identify someone during a lawful stop (Stop and Identify), when someone is enrolled and searched against a face recognition database after an arrest (Arrest and Identify), or when police departments or the FBI use face recognition systems to identify a specific criminal suspect captured by a surveillance camera (Investigate and Identify), they are conducting a targeted search pursuant to a particularized suspicion, and adhering to a basic Fourth Amendment standard.

Mug shot databases are not entirely “targeted.” They’re not limited to individuals charged with felonies or other serious crimes, and many of them include people like Chris Wilson—people who had charges dismissed or dropped, who were never charged in the first place, or who were found innocent of those charges. In the FBI face recognition database (NGI-IPS), for example, over half of all arrest records fail to indicate a final disposition.41 The failure of mug shot databases to separate the innocent from the guilty—and their inclusion of people arrested for peaceful, civil disobedience—are serious problems that must be addressed. That said, systems that search against mug shots are unquestionably more targeted than systems that search against a state driver’s license and ID photo database.

- 41. Ellen Nakashima, FBI wants to exempt its huge fingerprint and photo database from privacy protections, Washington Post (June 1, 2016) (“According to figures supplied by the FBI, 43 percent of all federal arrests and 52 percent of all state arrests—or 51 percent of all arrests in NGI—lack final dispositions, such as whether a person has been convicted or even charged.”)

Two of the three moderate risk deployments—while invisible to the search subject—mirror longstanding police practices. The enrollment and search of mug shots in face recognition databases (Arrest and Identify) parallels the decades-old practice of fingerprinting arrestees during booking. Similarly, using face recognition to compare the face of a bank robber—captured by a security camera—to a database of mug shots (Investigate and Identify) is clearly comparable to the analysis of latent fingerprints at crime scenes.

The other moderate risk deployment, Stop and Identify, is effectively novel.42 It is also necessarily conducted in close to real-time. On the other hand, a Stop and Identify search is the only use of face recognition that is somewhat transparent. When an officer stops you and asks to take your picture, you may not know that he’s about to use face recognition, but the request certainly raises questions.43 The vast majority of face recognition searches are effectively invisible.44

- 42. Police use of field fingerprint identification began in 2002, around the time when the first police face recognition systems were deployed. See Minnesota police test handheld fingerprint reader, Associated Press (Aug. 17, 2004), http://usatoday30.usatoday.com/tech/news/techinnovations/2004-08-17-mobile-printing_x.htm.

- 43. Field identifications have triggered some of the few community complaints about face recognition reported in national press. See Timothy Williams, Facial Recognition Moves from Overseas Wars to Local Police, N.Y. Times (Aug. 12, 2015); Ali Winston, Facial recognition, once a battlefield tool, lands in San Diego County, Center for Investigative Reporting (Nov. 7, 2013).

- 44. See Findings: Transparency and Accountability.

High risk deployments are quite similar to moderate risk deployments—except for the databases that they employ. When police or the FBI run face recognition searches against the photos of every driver in a state, they create a virtual line-up of millions of law-abiding Americans—and cross a line that American law enforcement has generally avoided.

Law enforcement officials emphasize that they are merely searching driver’s license photos that people have voluntarily chosen to provide to state government. “Driving is a privilege,” said Sheriff Gualtieri of the Pinellas County Sheriff’s Office.45

People in rural states—and states with voter ID laws—may chafe at the idea that getting a driver’s license is a choice, not a necessity. Most people would also be surprised to learn that by getting a driver’s license, they “volunteer” their photos to a face recognition network searched thousands of times a year for criminal investigations.

That surprise matters: A founding principle of American privacy law is that government data systems should notify people about how their personal information will be used, and that personal data should not be used outside of the “stated purposes of the [government data] system as reasonably understood by the individual, unless the informed consent of the individual has been explicitly obtained.”46

Most critically, however, by using driver’s license and ID photo repositories as large-scale, biometric law enforcement databases, law enforcement enters controversial, if not uncharted, territory.

- 45. Pinellas County Sheriff's Office, Interview with PCSO Sheriff Bob Gualtieri and Technical Support Specialist Jake Ruberto (July 26, 2016) (notes on file with authors).

- 46. See Dep’t of Health, Education, and Welfare, Records, Computers and the Rights of Citizens 57–58, 61–62 (1973). The HEW committee acknowledged that not all of the FIPPS would apply to criminal intelligence records, but insisted that some of the principles must apply: “We realize that if all of the safeguard requirements were applied to all types of intelligence records, the utility of many intelligence-type records . . . might be greatly weakened . . . It does not follow, however, that there is no need for safeguards . . . The risk of abuse of intelligence records is too great to permit their use without some safeguards to protect the personal privacy and due process interests of individuals.” Id. at 74–75.

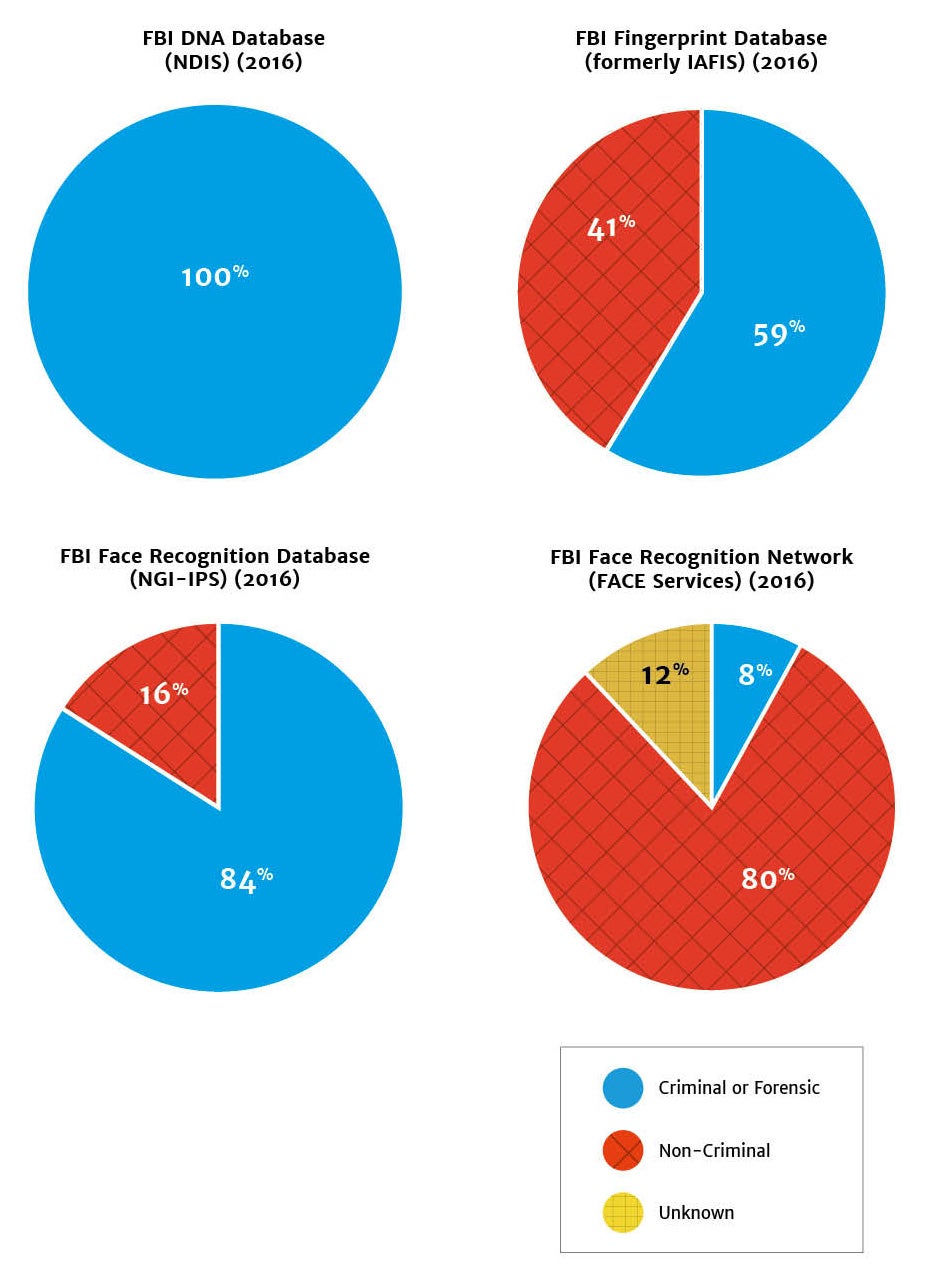

Historically, law enforcement biometric databases have been populated exclusively or primarily by criminal or forensic samples. By federal law, the FBI’s national DNA database, also known as the National DNA Index System, or “NDIS,” is almost exclusively composed of DNA profiles related to criminal arrests or forensic investigations.47 Over time, the FBI’s fingerprint database has come to include non-criminal records—including the fingerprints of immigrants and civil servants. However, as Figure 5 shows, even when one considers the addition of non-criminal fingerprint submissions, the latest figures available suggest that the fingerprints held by the FBI are still primarily drawn from arrestees.

The FBI face recognition unit (FACE Services) shatters this trend. By searching 16 states’ driver’s license databases, American passport photos, and photos from visa applications, the FBI has created a network of databases that is overwhelmingly made up of non-criminal entries. Never before has federal law enforcement created a biometric database—or network of databases—that is primarily made up of law-abiding Americans. Police departments should carefully weigh whether they, too, should cross this threshold.48
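The scale of that shift can be checked with simple arithmetic on the counts cited in note 49. The sketch below is illustrative only: the dictionary and function names are hypothetical, the figures are the rounded millions reported by the FBI and GAO, and, as note 49 explains, the fingerprint figures count persons while the photo figures count samples, so the shares are not strictly comparable across databases.

```python
# Counts in millions, as cited in note 49 (FBI and GAO figures).
FINGERPRINTS = {"criminal": 71.9, "non_criminal": 50.6}
NGI_IPS = {"criminal": 24.9, "non_criminal": 4.8}
FACE_SERVICES = {"criminal": 31.6, "non_criminal": 330.3, "unknown": 50.0}


def composition_shares(counts):
    """Return each category's share of the total, as a percentage rounded to 0.1."""
    total = sum(counts.values())
    return {category: round(100 * n / total, 1) for category, n in counts.items()}


for label, counts in [
    ("FBI fingerprint database", FINGERPRINTS),
    ("FBI face recognition database (NGI-IPS)", NGI_IPS),
    ("FBI face recognition network (FACE Services)", FACE_SERVICES),
]:
    print(label, composition_shares(counts))
```

On these figures, criminal records make up roughly 59 percent of the fingerprint database and 84 percent of NGI-IPS, but only about 8 percent of the FACE Services network, where non-criminal photos account for about 80 percent and the remaining 12 percent cannot be classified.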

- 47. See 42 U.S.C. § 14132(b).

- 48. Note that the perceived “risk” of a deployment may vary by jurisdiction. Many states have passed so-called “stop and identify” laws that require the subject of a legal police stop to produce identification, if available, upon request. See, e.g., Colo. Rev. Stat. § 16-3-103. In those states, a Stop and Identify face recognition search run against driver’s license photos—which automates a process that residents are legally required to comply with—may be less controversial.

Sources: FBI, GAO.49

- 49. The composition of the DNA database (NDIS), the FBI face recognition database (NGI-IPS), and the FBI face recognition unit’s network of databases (FBI FACE Services network) are calculated in terms of biometric samples (i.e. DNA samples or photographs), whereas the FBI fingerprint database composition is calculated in terms of individual persons sampled. For DNA, the Criminal or Forensic category includes all offender profiles (convicted offender, detainee, and legal profiles), arrestee profiles, and forensic profiles, as of July 2016. For fingerprints, the criminal category includes fingerprints that are “submitted as a result of an arrest at the local, state, or Federal level,” whereas the non-criminal category includes fingerprints “submitted electronically by local, state, or Federal agencies for Federal employment, military service, alien registration and naturalization, and personal identification purposes.” For the FBI face recognition database, Criminal or Forensic photos include “photos associated with arrests (i.e. ‘mug shots’),” whereas Non-Criminal Photos include “photos of applicants, employees, licensees, and those in positions of public trust.” Finally, for the FBI face recognition network, the Criminal or Forensic category includes photos of individuals detained by U.S. forces abroad and NGI-IPS criminal photos and Non-Criminal photos include photos from visa applications, driver's licenses, and NGI-IPS civil photos. The Unknown category includes 50 million from four states (Michigan, North Dakota, South Carolina, and Utah) that allow the FACE Services unit to conduct or request searches of driver’s license, mug shot, and correctional photos; unfortunately, the GAO did not disaggregate these state databases into their component parts, preventing us from distinguishing between criminal and non-criminal photos. FBI DNA Database (NDIS) (2016): Federal Bureau of Investigation, U.S. 
Department of Justice, CODIS - NDIS Statistics, https://www.fbi.gov/services/laboratory/biometric-analysis/codis/ndis-statistics (last visited Sept. 22, 2016) (Total: 15,706,103 DNA profiles); U.S. Department of Justice, Federal Bureau of Investigation, Next Generation Identification Monthly Fact Sheet (Aug. 2016) (identifying 71.9 million criminal subjects and 50.6 million civil subjects); Request for Records Disposition Authority, Fed. Bureau of Investigation, https://www.archives.gov/records-mgmt/rcs/schedules/departments/department-of-justice/rg-0065/n1-065-04-005_sf115.pdf (clarifying definitions of criminal and civil fingerprint files); FBI Face Recognition Database (NGI-IPS) (2015): U.S. Gov’t Accountability Office, GAO-16-267, Face Recognition Technology: FBI Should Better Ensure Privacy and Accuracy 46, Table 3 (May 2016) (Criminal photos: 24.9 million; Civil photos: 4.8 million); Federal Bureau of Investigation, U.S. Department of Justice, Privacy Impact Assessment for the Next Generation (NGI) Interstate Photo System, https://www.fbi.gov/services/records-management/foipa/privacy-impact-assessments/interstate-photo-system (September 2015) (clarifying composition of the Criminal Identity Group and the Civil Identity Group); FBI Face Recognition Network (FACE Services) (2015): U.S. Gov’t Accountability Office, GAO-16-267, Face Recognition Technology: FBI Should Better Ensure Privacy and Accuracy 47-48, Table 4 (May 2016) (Criminal photos 31.6 million; Civil photos: 330.3 million; Unknown photos: 50 million). Note that the IAFIS system has been integrated into the new Next Generation Identification system. See Federal Bureau of Investigation, U.S. Department of Justice, Next Generation Identification, https://www.fbi.gov/services/cjis/fingerprints-and-other-biometrics/ngi (last visited Sept. 22, 2016). However, we have been unable to find statistics on the composition of the fingerprint component of NGI.

Real-time face recognition marks a radical change in American policing—and American conceptions of freedom. With the unfortunate exception of inner-city black communities—where suspicionless police stops are all too common50—most Americans have always been able to walk down the street knowing that police officers will not stop them and demand identification. Real-time, continuous video surveillance changes that. And it does so by making those identifications secret, remote, and potentially pervasive. What’s more, as this report explains, real-time identifications may also be significantly less accurate than identifications in more controlled settings (i.e. Stop and Identify, Arrest and Identify).51

In a city equipped with real-time face recognition, every person who walks by a street surveillance camera, or a police-worn body camera, may have her face searched against a watchlist. Right now, technology likely limits those watchlists to a small number of individuals. Future technology will likely not have such limits, allowing real-time searches to be run against larger databases of mug shots or even driver’s license photos.52

There is no current analog—in technology or in biometrics—for the kind of surveillance that pervasive, video-based face recognition can provide. Most police geolocation tracking technology tracks a single device, requesting the records for a particular cell phone from a wireless company, or installing a GPS tracking device on a particular car. Exceptions to that trend—like the use of cell-tower “data dumps” and cell-site simulators—generally require either a particularized request to a wireless carrier or the purchase of a special, purpose-built device (i.e., a Stingray). A major city like Chicago may own a handful of Stingrays. It reportedly has access to 10,000 surveillance cameras.53 If cities like Chicago equip their full camera networks with face recognition, they will be able to track someone’s movements retroactively or in real-time, in secret, and by using technology that is not covered by the warrant requirements of existing state geolocation privacy laws.54

- 50. See, e.g., Michelle Alexander, The New Jim Crow: Mass Incarceration In The Age Of Colorblindness 132–133 (2010).

- 51. See Findings: Accuracy.

- 52. Interview with facial recognition company (June 22, 2016) (notes on file with authors); Interview with Anil Jain, University Distinguished Professor, Michigan State University (May 27, 2016) (notes on file with authors).

- 53. See ACLU of Illinois, Chicago’s Video Surveillance Cameras: A Pervasive and Unregulated Threat to Our Privacy, 1 (Feb. 2011), http://www.aclu-il.org/wp-content/uploads/2012/06/Surveillance-Camera-Report1.pdf.

- 54. Modern state geolocation privacy laws tend to regulate the acquisition of information from electronic devices, e.g. mobile phones and wireless carriers. They do not, on their face, regulate face recognition, which does not require access to any device or company database. See, e.g., Utah Code Ann. § 77-23c-102(1)(a)-(b); Cal. Penal Code § 1546.1(a).