Chris was arrested for trespass, a misdemeanor. The Hillsborough County Sheriff’s Office took her to a local station, fingerprinted her, took her mug shot, and released her that evening. She had never been arrested before, and so she was informed that she was eligible for a special diversion program. She paid a fine, did community service, and the charges against her were dropped.

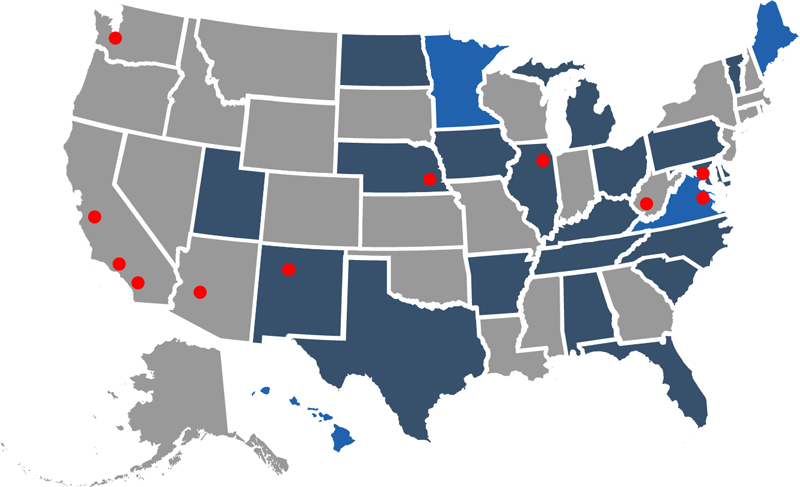

Chris was not told that as a result of her arrest, her mug shot has likely been added to not one, but two separate face recognition databases run by the FBI and the Pinellas County Sheriff’s Office. These two databases alone are searched thousands of times a year by over 200 state, local, and federal law enforcement agencies.

The next time Chris participates in a protest, the police won’t need to ask her for her name in order to identify her. They won’t need to talk to her at all. They only need to take her photo. FBI co-authored research suggests that these systems may be least accurate for African Americans, women, and young people aged 18 to 30. Chris is 26. She is black. Unless she initiates a special court proceeding to expunge her record, she will be enrolled in these databases for the rest of her life.

What happened to Chris doesn’t affect only activists: Increasingly, law enforcement face recognition systems also search state driver’s license and ID photo databases. In this way, roughly one out of every two American adults (48%) has had their photo enrolled in a criminal face recognition network.

They may not know it, but Chris Wilson and over 117 million American adults are now part of a virtual, perpetual line-up. What does this mean for them? What does this mean for our society? Can police use face recognition to identify only suspected criminals, or can they use it to identify anyone they want? Can police use it to identify people participating in protests? How accurate is this technology, and does accuracy vary on the basis of race, gender, or age? Can communities debate and vote on the use of this technology? Or is it being rolled out in secret?

FBI and police face recognition systems have been used to catch violent criminals and fugitives. Their value to public safety is real and compelling. But should these systems be used to track Chris Wilson? Should they be used to track you?